Is it cold outside? Part 1

This site isn’t always going to be about food - I have a couple of other projects that I do some work on that I’ll also document. For me, the hardest part of of a project is generally coming up with the initial idea - once I think of something at could be done programmatically, then all the pieces start to fall into line. I hope by writing up some of these thoughts, I can encourage other people to do the same and help me when my creativity lapses!

I had the thought not too long ago - my apartment seems pretty hot, what if it’s cooler outside and I’m wasting power? That started the gears turning - surely there’re websites that track outside temperatures? Or sensors I can use to monitor temperatures? Aaaand down the rabbit-hole I go.

After some investigation, I came up with a basic plan - my raspberry pi with a temperature sensor for internal readings (the DHT11 looks like it will work) and I can access a website for outside readings in my area. I also want to be notified of these changes so I’ll need a push notification service like pushover. Now it’s time for me to get started.

Setting up the Pi

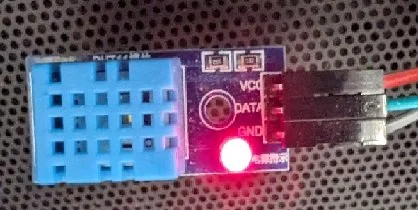

Now that I had a sensor, I had to wire it to the board.

The sensor has 3 nodes: VCC (power), DATA, and GND (ground)

I used Pinout to work out which pins to use on the pi - the documentation said it uses ‘3v3’ power, I set GND to a grounding pin, and I connected DATA to pin 11 (GPIO 17).

I then got to work writing a python script that was able to read data from the sensor - luckily Adafruit has guides on accessing the output that I could look at.

import Adafruit_DHT # Adafruit's library for these sensors

sensor = Adafruit_DHT.DHT11 # Specifies the sensor type

pin = 17 #Specifies the DATA pin

try: # will attempt to read the sensor data

humidity, temperature = Adafruit_DHT.read_retry(sensor, pin)

print("Humidity:", str(humidity))

print("Temperature:", str(temperature))

except:

print("error")

After a bit of trial and error I got this together - managing to print out the current temperature and humidity. I didn’t output humidity because I’m going to use it (I don’t have a use for the humidity at this point), the Adafruit library for this sensor checks and outputs both as a tuple. If I’d written only one variable, then I’d have to specify which element of the tuple I wanted, as below (noting that python numbering starts at zero)

output = Adafruit_DHT.read_retry(sensor, pin)

print("Humidity:", str(output[0]))

print("Temperature:", str(output[1]))

Finding weather data

And now with that, I have a functioning temperature sensor, so I’ll do some research into weather data before wrapping up. Here in Australia we have the Bureau of Meteorology (BoM) and it has a lot of great information available, so hopefully if you’re not in Australia then your friendly neighbourhood government meteorological organisation serves up the same level of information.

After a bit of poking around I found that I can look up min/max temperatures using this URL format:

http://www.bom.gov.au/[state]/forecasts/[area].shtml

While searching for an API for weather observations, I found the Weather Data Services page with links to the current temperature readings (to the last 10mins) under “Observations - individual stations”. I actually went deeper to the individual weather station which gives a lot more specific information for my area. It’s always a good idea to search for an API before starting to scrape a website - websites generally aren’t designed with scraping in mind so it might be easier for you to work with pre-formatted data while also reducing the strain on the website’s resources - win-win! Another step you should take is to check the robots.txt - the ‘rules’ for scraping a website. I say ‘rules’ because they’re not really enforced - they’re meant to indicate how the website owner wants crawlers to interact with their site - but if you flaunt the rules, then they’ll start locking things down and you’ll ruin it for everyone, so let’s not do that.

Going through the rules, I don’t see any rules I’d possibly break so I’ll explain & demonstrate my general go-to rules of scraping:

1. Always set a user-agent

The user-agent basically identifies who/what is accessing a site, indicating the tool/browser so the website can provide the best experience. The default user-agent of the python requests library states that it’s python accessing the page which can be an easy way to identify/block bot activity. Just search online for an example of user-agent and use that.

2. Test on cached pages

It’s really tempting to download a copy of the page, parse it, test information extraction, download a copy of the page, etc. in a vicious cycle. This sort of repeated activity can also lead to IP bans from proactive servers, but is at least rudely chewing up resources so we’ll work to avoid it. By outputting the results of your first scrape to a file and then testing on that output, you’re able to test to your heart’s desire!

import os.path

import requests

from bs4 import BeautifulSoup

header_list = {'User-Agent': 'Mozilla/5.0 (X11; Linux x86_64; rv:12.0) Gecko/20100101 Firefox/12.0'}

url = 'www.google.com'

cache = 'cache_file'

if os.path.isfile(cache) == False:

result = requests.get(url, headers=header_list)

with open(cache, 'wb') as outfile:

outfile.write(result.content)

with open(cache, 'rb') as infile:

soup = BeautifulSoup(infile, 'html.parser')

See here how I’ve specified the user-agent? And before I use requests to scrape the webpage, I check to see if it’s already been done before starting? If the cache file exists, then the script will just read/parse that - if I want a refreshed page then I can just delete the cache, but this script can now run offline after the first run. BeautifulSoup is a great HTML parser that I’ll explain in more detail in the next entry of this blog series.

Ok! Now I have the basic building blocks:

The sensor is set up

I’ve identified the data I need

I have a good way to test my scraping

In my next blog on this topic I’ll cover push notifications and then I’ll put it all together!